VMware Virtual SAN 6.0 What's New

With the announcement of vSphere 6.0 comes Virtual SAN (VSAN) 6.0, the next release of VSAN after 5.5.

This release brings several improvements, which I list below. Do take note of the differences, as some of the improvements change how things worked in VSAN 5.5.

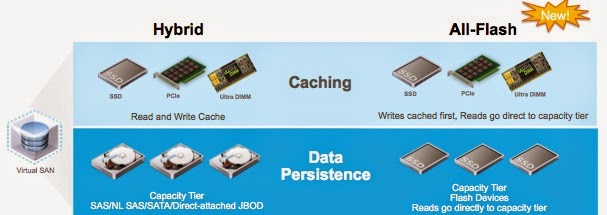

To start, we need to get the terms right. You will often hear of Hybrid VSAN and All-Flash VSAN. Hybrid is not new: VSAN 5.5 has always been Hybrid VSAN. It means a mix of flash, or Solid State Drives (SSD), for cache and Magnetic Disks (MD) for persistent data.

All-Flash, on the other hand, is new in VSAN 6.0. There are no MDs; flash/SSD is used both for the cache and for persistent data.

In VSAN 5.5, the cache is split 70/30 between read cache and write buffer. This ratio is fixed and cannot be changed. In a write-intensive VDI environment this is less favourable: even though the cache sits on flash/SSD, only 30% of it is used as write cache.

In All-Flash VSAN 6.0, the cache is used 100% as write cache, while reads are served directly from the SSD persistent (capacity) tier. Hybrid VSAN 6.0 keeps the 70/30 split. This is very favourable for write-intensive workloads such as VDI.
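To make the difference concrete, here is a minimal Python sketch of how a single cache device is divided under each scheme. The 70/30 split applies to Hybrid configurations and the 100% write cache to All-Flash; the function name `cache_split` and the 400 GB device size are illustrative assumptions, not VMware sizing guidance.

```python
# Illustrative sketch only: cache_split is a hypothetical helper and the
# 400 GB cache device is an arbitrary example size.

def cache_split(cache_gb: float, mode: str) -> tuple[float, float]:
    """Return (read_cache_gb, write_buffer_gb) for one cache device.

    mode "hybrid":    fixed 70% read cache / 30% write buffer
    mode "all-flash": 100% write buffer; reads come from the capacity SSDs
    """
    if mode == "hybrid":
        return cache_gb * 0.7, cache_gb * 0.3
    if mode == "all-flash":
        return 0.0, cache_gb
    raise ValueError(f"unknown mode: {mode!r}")

for mode in ("hybrid", "all-flash"):
    read_gb, write_gb = cache_split(400, mode)
    print(f"{mode:>9}: read cache {read_gb:.0f} GB, write buffer {write_gb:.0f} GB")
```

With the same 400 GB device, the write buffer grows from 120 GB (Hybrid) to the full 400 GB (All-Flash), which is why write-heavy workloads benefit.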

Here is a table of the changes. Between VSAN 6.0 Hybrid and All-Flash, only the IOPS per host differ.

source: VMware, Inc

| | Virtual SAN 5.5 | Virtual SAN 6.0 Hybrid | Virtual SAN 6.0 All-Flash |
|---|---|---|---|
| Hosts per Cluster | 32 | 64 | 64 |
| VMs per Host | 100 | 200 | 200 |
| IOPS per Host | 20K | 40K | 90K |
| Snapshot depth per VM | 2 | 32 | 32 |
| Virtual Disk size | 2TB | 62TB | 62TB |
| Virtual Machines per Cluster | 3200 | 6400 | 6400 |
| Components per Host | 3000 | 9000 | 9000 |
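As a rough planning aid, the sketch below encodes some of the maximums from the table and reports which ones a proposed cluster would exceed. `LIMITS` and `check_plan` are hypothetical names used only for illustration; since Hybrid and All-Flash 6.0 share the same limits in the table, they use a single "6.0" entry.

```python
# Hypothetical planning helper. Maximums transcribed from the table above;
# Hybrid and All-Flash VSAN 6.0 share them, so one "6.0" entry covers both.
LIMITS = {
    "5.5": {"hosts_per_cluster": 32, "vms_per_host": 100,
            "vms_per_cluster": 3200, "vmdk_tb": 2},
    "6.0": {"hosts_per_cluster": 64, "vms_per_host": 200,
            "vms_per_cluster": 6400, "vmdk_tb": 62},
}

def check_plan(version: str, hosts: int, vms_per_host: int,
               largest_vmdk_tb: float) -> list[str]:
    """Return the maximums a planned cluster would exceed (empty if it fits)."""
    lim = LIMITS[version]
    problems = []
    if hosts > lim["hosts_per_cluster"]:
        problems.append(f"{hosts} hosts > {lim['hosts_per_cluster']} per cluster")
    if vms_per_host > lim["vms_per_host"]:
        problems.append(f"{vms_per_host} VMs per host > {lim['vms_per_host']}")
    total_vms = hosts * vms_per_host
    if total_vms > lim["vms_per_cluster"]:
        problems.append(f"{total_vms} VMs > {lim['vms_per_cluster']} per cluster")
    if largest_vmdk_tb > lim["vmdk_tb"]:
        problems.append(f"{largest_vmdk_tb}TB virtual disk > {lim['vmdk_tb']}TB")
    return problems

# Example plan: 40 hosts running 150 VMs each, largest virtual disk 4TB.
for version in ("5.5", "6.0"):
    print(version, check_plan(version, hosts=40, vms_per_host=150, largest_vmdk_tb=4))
```

Note that the per-cluster VM limit can bind before the per-host one: 64 hosts at 200 VMs each would be 12,800 VMs, above the 6,400 per-cluster maximum.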

VSAN's overhead is less than 10% of CPU utilization.

With the announcement of VSAN 6.0, both VSAN 6.0 and VSAN 5.5 will support direct-attached JBODs for blade servers. This allows blade servers, which have few or no local disks, to scale out their storage and be used in a VSAN setup.

VSAN is supported on self-built servers using components from the HCL list, on VSAN Ready Nodes, which come with all parts already certified, or through EVO:RAIL.

Use Cases

- In VSAN 5.5, the main use cases were DR, Test/Dev and VDI. VSAN 6.0 adds one more: Business Critical Applications (BCA). This is made possible by 1) All-Flash and 2) the new on-disk format, both of which result in better performance.

- For Hybrid VSAN, the network requires at least 1Gb Ethernet, though 10Gb is recommended. For All-Flash VSAN, 10Gb Ethernet is a requirement.

- The minimum is still three hosts in VSAN 6.0. However, I would recommend N+1, i.e. four hosts, to start.

- Minimum of one disk group per host. Each disk group has one flash/SSD for cache and at least one MD (Hybrid) or SSD (All-Flash) for persistent data.

- Maximum of 7 MDs/SSDs for persistent data per disk group.

- Maximum of 5 disk groups per host.

- VSAN 6.0 uses VSAN FS as its new on-disk format, replacing VMFS-L used in VSAN 5.5. Online migration via the RVC console is supported, and VSAN 6.0 still supports VMFS-L if you choose not to upgrade.

- VSAN 5.5 uses vmfsSparse (redo logs) for snapshots, while VSAN 6.0 uses vsanSparse.

- Note that once the VSAN 6.0 on-disk format is in use, vSphere 5.5 hosts can no longer join the VSAN cluster.
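The sizing rules in the list above can be captured in a small checker. This is a hypothetical Python sketch (`DiskGroup` and `validate_cluster` are illustrative names, not a VMware API) that encodes only the limits stated here: at least three hosts, 1 to 5 disk groups per host, one cache device and 1 to 7 capacity devices per disk group, and 10GbE for All-Flash (at least 1GbE for Hybrid).

```python
from dataclasses import dataclass

# Hypothetical model of a disk group; not a VMware API.
@dataclass
class DiskGroup:
    cache_devices: int      # flash/SSD devices used as cache
    capacity_devices: int   # MDs (Hybrid) or SSDs (All-Flash) for persistent data

def validate_cluster(hosts: list[list[DiskGroup]], all_flash: bool,
                     nic_gbps: float) -> list[str]:
    """Return rule violations for a planned cluster (empty list if none)."""
    errors = []
    if len(hosts) < 3:
        errors.append(f"need at least 3 hosts, got {len(hosts)}")
    if all_flash and nic_gbps < 10:
        errors.append("All-Flash requires 10GbE networking")
    elif nic_gbps < 1:
        errors.append("Hybrid requires at least 1GbE networking")
    for h, disk_groups in enumerate(hosts):
        if not 1 <= len(disk_groups) <= 5:
            errors.append(f"host {h}: needs 1-5 disk groups, got {len(disk_groups)}")
        for g, dg in enumerate(disk_groups):
            if dg.cache_devices != 1:
                errors.append(f"host {h} group {g}: needs exactly 1 cache device")
            if not 1 <= dg.capacity_devices <= 7:
                errors.append(f"host {h} group {g}: needs 1-7 capacity devices")
    return errors

# Four hosts (N+1), each with one group of 1 cache SSD + 3 capacity SSDs,
# but only 1GbE networking: flagged because All-Flash needs 10GbE.
plan = [[DiskGroup(cache_devices=1, capacity_devices=3)] for _ in range(4)]
print(validate_cluster(plan, all_flash=True, nic_gbps=1))
```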

In VSAN 5.5, we were unable to tell VSAN where each node in the cluster physically sits. For example, in a 10-host cluster, 5 nodes may share the same power supply (i.e. the same rack) while the other 5 nodes are on another power supply (i.e. a different rack). Knowing this layout also helps with network partitioning, where the bigger Fault Domain wins when the cluster is split.

With VSAN 6.0, we can create Fault Domains, which group the hosts of a VSAN cluster into different logical failure zones. This lets VSAN understand the layout and ensure that replicas are not provisioned into the same failure zone.

This is extremely useful because, without Fault Domains, we needed more resources to work around the problem. With FTT=2, you need at least 5 nodes for 3 replicas and 2 witnesses. As illustrated below, without explicitly defined Fault Domains there are actually 6 Fault Domains, one per server. Two nodes in the same rack therefore count as two separate Fault Domains to VSAN, so two replicas could still be placed in the same rack. Setting FTT=2 ensures that at least one replica ends up in a separate rack.
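The node count quoted above follows the usual rule for mirrored objects: FTT+1 replicas and 2×FTT+1 hosts (or Fault Domains), with the remaining components acting as witnesses. Here is a minimal sketch of that arithmetic, assuming the simple layout described in the text (one component per host, no striping); `ftt_requirements` is a hypothetical name.

```python
# Hypothetical helper illustrating the FTT arithmetic; assumes one
# component per host/fault domain and no striping.
def ftt_requirements(ftt: int) -> dict[str, int]:
    """Minimum replicas, witnesses and fault domains for a mirrored object."""
    if ftt < 0:
        raise ValueError("ftt must be non-negative")
    replicas = ftt + 1                     # full copies of the data
    fault_domains = 2 * ftt + 1            # without defined FDs: one per host
    witnesses = fault_domains - replicas   # works out to ftt
    return {"replicas": replicas, "witnesses": witnesses,
            "fault_domains": fault_domains}

for ftt in (1, 2, 3):
    print(f"FTT={ftt}: {ftt_requirements(ftt)}")
```

For FTT=2 this gives 3 replicas, 2 witnesses and 5 hosts, matching the figures in the paragraph above.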

source: VMware, Inc

With the new Fault Domains, we can keep the default FTT=1 and still ensure that each replica lands in a separate Fault Domain, achieving redundancy as shown below. Not only does this work with as few as three nodes, it also uses fewer resources.
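The placement idea can be illustrated with a deliberately simplified Python sketch: assign each component of an FTT=1 object to a different Fault Domain, first with racks defined as Fault Domains and then with every host treated as its own Fault Domain. This is not VSAN's actual placement logic; `place_components`, the rack and host names, and the first-fit strategy (take the first host in each Fault Domain) are assumptions for illustration.

```python
# Simplified first-fit placement; NOT VSAN's real algorithm. Names are hypothetical.
def place_components(fault_domains: dict[str, list[str]],
                     ftt: int = 1) -> dict[str, tuple[str, str]]:
    """Assign replicas and witnesses so no two share a fault domain."""
    needed = 2 * ftt + 1
    usable = [(fd, hosts) for fd, hosts in fault_domains.items() if hosts]
    if len(usable) < needed:
        raise ValueError(f"FTT={ftt} needs {needed} fault domains, found {len(usable)}")
    components = [f"replica-{i + 1}" for i in range(ftt + 1)]
    components += [f"witness-{i + 1}" for i in range(ftt)]
    # zip stops after the last component, so extra fault domains stay unused
    return {comp: (fd, hosts[0]) for comp, (fd, hosts) in zip(components, usable)}

racks = {
    "rack-A": ["esx01", "esx02"],
    "rack-B": ["esx03", "esx04"],
    "rack-C": ["esx05", "esx06"],
}
# Racks defined as Fault Domains: each component lands in a different rack.
print(place_components(racks, ftt=1))

# No Fault Domains defined: every host is its own domain, so both replicas
# can end up in rack-A (esx01 and esx02).
per_host = {host: [host] for hosts in racks.values() for host in hosts}
print(place_components(per_host, ftt=1))
```

The second call shows the problem described earlier: with per-host Fault Domains, a single rack failure could take out both replicas even though VSAN considers them separated.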

source: VMware, Inc
